Event-Driven Systems - Where to start?

Many organizations are moving to event-driven architectures. The motivations for doing so can vary, but most often, it boils down to the desire to have a distributed system with loosely coupled components.

At least, that's from an architectural perspective. Securing financing for such an endeavor requires it to deliver business value, which typically comes in the form of:

- Speed to production - The modernization will introduce a level of autonomy and reduced friction for teams working on delivering products to production.

- Growth - During periods of growth, hiring for older technologies can be challenging.

- Scalability - The existing technical architecture may not be able to scale to meet the needs of the business.

- Technical debt - Systems have become so unmanageable that a rewrite is considered the only way forward.

- Cost saving - The organization has identified significant cost savings from the new architecture.

Where to start

Assuming all stakeholders are on board with the new architecture, a strategy for defining events should be formalized.

Events as first-class citizens

Formalizing the strategy is critical, because defining events is not just the responsibility of development teams. Left to themselves, development teams will often define events in isolation, essentially rolling the dice when deciding which pivotal events to capture. Even when teams consist of individuals with extensive domain knowledge, re-architecting a system affords an organization a fresh look at its current business and how the new architecture will stack up against it. In this context, not involving business stakeholders in the definition of events will most likely result in a suboptimal solution.

Currently, the most effective way to involve the business in such decisions is through event storming workshops. If other strategies are used, they must provide a systematic approach to walking through the behavior of a system, both existing and desired. In addition, they must do so in a collaborative environment.

Technical architecture

Once a strategy has been defined for identifying events, we need to decide on what will emit these events and how they will be reacted to. There are many options here, including:

- Using a cloud provider to generate and deliver events.

- Using event sourcing with an associated library like Axon or Eventuate.

- Using Change Data Capture.

Cloud Provider

If deploying workloads to a cloud provider, organizations can decide to use the cloud-provided event infrastructure. AWS provides EventBridge while Google Cloud and Azure offer Cloud Pub/Sub and Event Grid respectively.

Event Sourcing

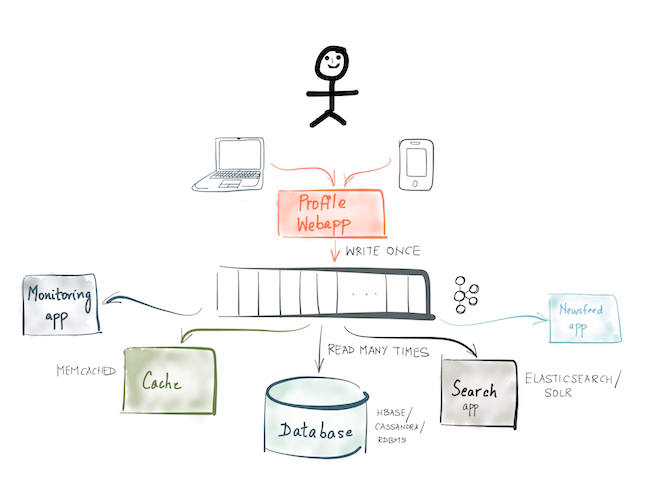

Event sourcing is one of the most natural ways to design event-driven systems. This model can provide immense flexibility in how an organization stores and views its data. The basic premise is that each state-changing action in a system is written to an append-only event log. These entries are immutable - they represent exactly what happened in the system and are never modified. This raw form of data is the source of truth. Any component interested in these events can subscribe to the log and act on the data accordingly. A database is therefore just another subscriber to the event log.
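To make the premise concrete, here is a minimal in-memory sketch of an append-only log with subscribers. It is an illustration only, not how any of the frameworks listed later implement it: the `Event` and `EventLog` classes, the account event names and the `balances` dictionary standing in for a database are all assumptions made for this example.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List


@dataclass(frozen=True)
class Event:
    """An immutable record of something that happened (hypothetical shape)."""
    sequence: int
    kind: str
    data: Dict[str, Any]


class EventLog:
    """In-memory append-only log: entries are added, never updated or deleted."""

    def __init__(self) -> None:
        self._events: List[Event] = []
        self._subscribers: List[Callable[[Event], None]] = []

    def subscribe(self, handler: Callable[[Event], None]) -> None:
        """Register a component that wants to react to new events."""
        self._subscribers.append(handler)

    def append(self, kind: str, data: Dict[str, Any]) -> Event:
        """Record a new event and notify every subscriber."""
        event = Event(sequence=len(self._events) + 1, kind=kind, data=dict(data))
        self._events.append(event)
        for handler in self._subscribers:
            handler(event)
        return event

    def read_from(self, sequence: int = 1) -> List[Event]:
        """Return all events from the given sequence number onwards."""
        return self._events[sequence - 1:]


# The "database" is just another subscriber that maintains current state.
balances: Dict[str, int] = {}


def update_balances(event: Event) -> None:
    account = event.data["account"]
    if event.kind == "AccountOpened":
        balances[account] = 0
    elif event.kind == "MoneyDeposited":
        balances[account] += event.data["amount"]


log = EventLog()
log.subscribe(update_balances)
log.append("AccountOpened", {"account": "acc-1"})
log.append("MoneyDeposited", {"account": "acc-1", "amount": 50})
log.append("MoneyDeposited", {"account": "acc-1", "amount": 25})
```

The state held in `balances` is derived entirely from the log, so it could be thrown away and rebuilt at any time. A production log would also need durability, ordering guarantees and delivery semantics, none of which this sketch attempts.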

This has interesting implications. A common pain point in designing relational database tables is predicting how clients will query those tables. A best guess is needed at the design stage, and indexes can be added later to accommodate different types of reads. However, indexes can impact write throughput, so finding the right balance can be challenging. With an event-sourced model, we can focus on capturing raw data and address how the data will be queried at a later time.

As requirements emerge that necessitate different views of the raw data, we can create new tables optimized for those use cases. We can then easily subscribe to the event log to populate these new tables. Moreover, we can do this after the fact, which means we will receive all the raw data from the point at which we began capturing it, even if we created our read model much later.
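As a rough illustration of building a read model after the fact, the sketch below replays a list of previously captured events into a new view. The order events, their fields and the `project_spend_per_customer` function are hypothetical, and a plain Python list stands in for the event log.

```python
from collections import defaultdict
from typing import Any, Dict, List

# Hypothetical events captured long before the new read model was needed.
event_log: List[Dict[str, Any]] = [
    {"seq": 1, "type": "OrderPlaced", "customer": "alice", "total": 40},
    {"seq": 2, "type": "OrderPlaced", "customer": "bob", "total": 15},
    {"seq": 3, "type": "OrderCancelled", "customer": "bob", "total": 15},
    {"seq": 4, "type": "OrderPlaced", "customer": "alice", "total": 60},
]


def project_spend_per_customer(events: List[Dict[str, Any]]) -> Dict[str, int]:
    """Replay events from the start to build a 'spend per customer' view."""
    spend: Dict[str, int] = defaultdict(int)
    for event in events:
        if event["type"] == "OrderPlaced":
            spend[event["customer"]] += event["total"]
        elif event["type"] == "OrderCancelled":
            spend[event["customer"]] -= event["total"]
    return dict(spend)


# The read model is created today, yet it reflects every event since capture began.
spend_by_customer = project_spend_per_customer(event_log)
```

In a real system the result would be written to a table optimized for this query, and the same projection would keep consuming new events as they arrive.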

When adopting this model, it's crucial to obtain buy-in from those who work closely with the data. Depending on the chosen architecture, events might be physically located somewhere other than the database people are used to. This data requires the same level of protection and backup as any other data, so if this can't be arranged, then event sourcing is not a workable solution.

If, on the other hand, a decision is made to move the event log into the current database, there will be multiple copies of the data: the raw data and a number of read models. This might cause concern for database administrators, although it's worth noting that the read models are read-only. The delay in updating the read models should be negligible in most cases, which should alleviate any data inconsistency concerns.

Some examples of popular event sourcing frameworks include:

- Akka Persistence

- Axon Server

- EventStore

- Eventuate

Change Data Capture

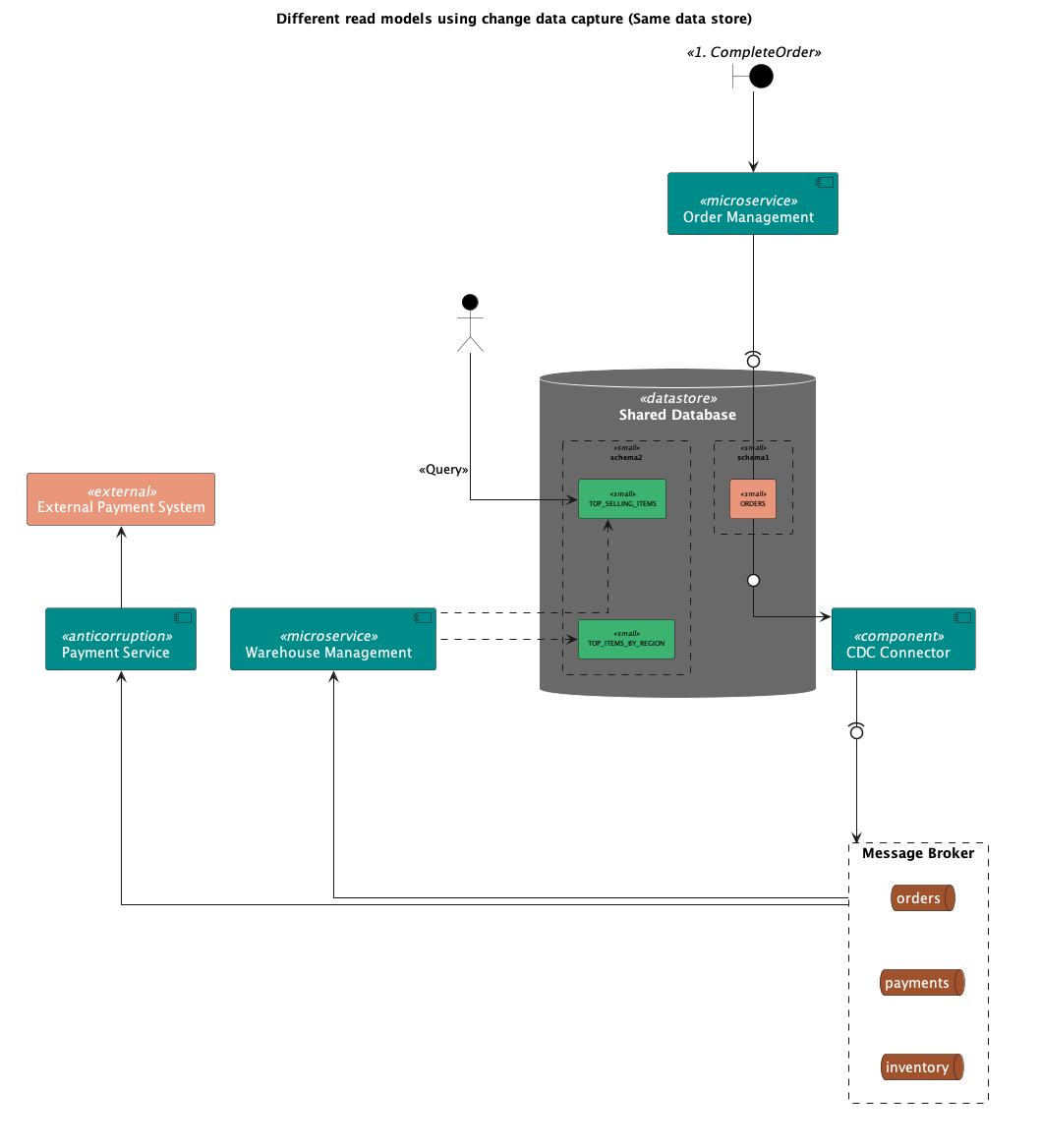

The shift to event sourcing can be overwhelming for many organizations, particularly those attempting to migrate mature legacy systems with a shared database model. For these organizations, a less disruptive alternative exists in Change Data Capture (CDC). CDC involves capturing database changes and propagating these changes to downstream system components using messaging middleware. With this approach, we maintain the original database as the source of truth, but listen for changes of interest in order to transform them into events. Events typically get propagated to an event bus and delivered to interested parties.

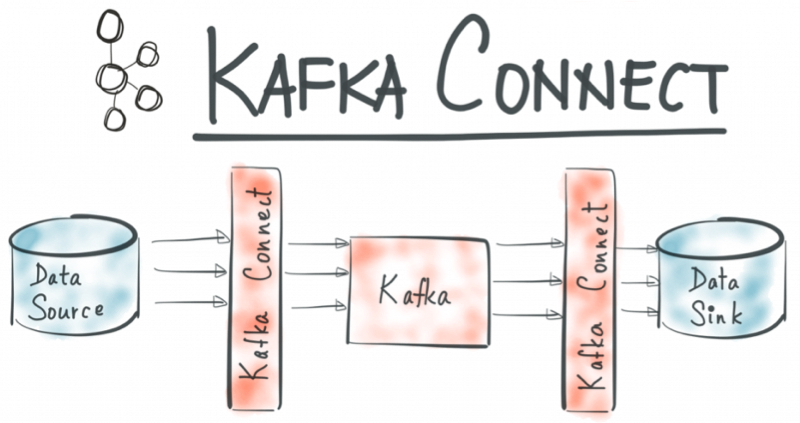

Kafka Connect has excellent support for CDC, offering multiple ways to listen for database changes. From an operational standpoint, it's best to avoid adding database triggers each time a new event is defined. As an alternative, Kafka Connect provides a mechanism to listen for changes at the database log level. This way, as each new event is identified, we can add a mechanism to filter the relevant changes and transform them accordingly for use in downstream systems.
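The filter-and-transform step can be sketched independently of any particular connector. The change-record shape below (`table`, `op`, `before`, `after`) is an assumption loosely modeled on what log-based CDC tools emit, not the exact format of any specific one, and the `orders` table and event names are hypothetical.

```python
from typing import Any, Dict, List, Optional


def to_domain_event(change: Dict[str, Any]) -> Optional[Dict[str, Any]]:
    """Map a row-level change to a domain event, or None if it is not of interest."""
    if change["table"] != "orders":
        return None  # filter: ignore tables we have not defined events for
    before, after = change.get("before"), change.get("after")
    if change["op"] == "insert":
        return {"type": "OrderPlaced", "order_id": after["id"]}
    if (
        change["op"] == "update"
        and before["status"] != after["status"]
        and after["status"] == "SHIPPED"
    ):
        return {"type": "OrderShipped", "order_id": after["id"]}
    return None  # a change happened, but it does not correspond to a business event


# Hypothetical change records, as they might arrive from the database log.
changes: List[Dict[str, Any]] = [
    {"table": "orders", "op": "insert", "before": None,
     "after": {"id": 7, "status": "NEW"}},
    {"table": "audit_log", "op": "insert", "before": None,
     "after": {"id": 1}},
    {"table": "orders", "op": "update",
     "before": {"id": 7, "status": "NEW"},
     "after": {"id": 7, "status": "SHIPPED"}},
    {"table": "orders", "op": "update",
     "before": {"id": 7, "status": "SHIPPED"},
     "after": {"id": 7, "status": "SHIPPED"}},
]

events = [e for e in map(to_domain_event, changes) if e is not None]
```

Keeping this mapping in one place means each newly identified event becomes an additional rule in the transformation rather than a new database trigger.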

CDC also provides organizations with an opportunity to begin building separate components that react to these events, laying the foundation for a distributed systems architecture.

Having the read models updated in the same database might seem unusual, but for organizations with a multi-year strategy for transitioning to an event-driven or microservice architecture, this approach can be more appealing. All data is still managed in a central database, but we can organize our data in a way that more easily allows us to migrate it to a database-per-service model later if necessary. Furthermore, write throughput improves due to fewer indexes, while reads are fast because they are optimized for specific types of queries. This CQRS-style migration can serve as a beneficial stepping stone for identifying and handling events without taking on too much at once.

Finally, we can also choose to remove the CDC connector at some point and shift the event-driven aspect of the system up to the service layer. In this case, much of the heavy lifting associated with building an event-driven system will have already been completed.

Conclusion

Cloud providers offer numerous intriguing solutions related to event-driven infrastructure. If this is to be used as a core part of the system, we need to ensure we have the appropriate level of observability configured. Moreover, if the messaging infrastructure is specific to a certain cloud provider, it may mean that when developing in-house, we need to use different event infrastructure, which is far from ideal.

Event sourcing is extremely powerful and one of the most natural ways to develop event-driven systems. For many organizations with existing products, it can be a significant mental shift and may prove overwhelming. Events must be treated as first-class citizens when adopting event sourcing. It is crucial that the architecture is understood by all stakeholders. There can be no compromise regarding the source of truth: it must be the event log. Backdoors to the database are not permitted. All data must pass through the event engine.

Change Data Capture can be a reasonable first step towards creating an event-driven system. The reality is that many organizations need several years to transition to an event-driven architecture while continuing with business as usual. CDC offers these organizations an opportunity to start thinking about the behavior of a system in terms of events while allowing the existing database to remain as the source of truth.