Pitfalls to avoid when adopting Microservices

Advances in hardware, bandwidth, and cloud infrastructure often lead to new software architectures. Designs and orchestrations that were previously impossible or not cost-effective can suddenly be put to use. This leads to excitement from architects and developers alike.

But the introduction of microservices has captured the interest of a far wider audience than just architects and developers. The term is routinely used outside of these circles, sometimes even at the executive level. Whether or not you view this as appropriate, it's understandable. While development teams adopt this type of architecture for many reasons, other stakeholders are drawn to it because it promises to shorten the time it takes to get features deployed to production, otherwise known as cycle time.

Speed to production

An overlapping goal of software architects and agile practitioners is to minimize dependencies. For scrum teams, this equates to being cross-functional, minimizing the reliance on individuals external to the team. In the case of architects and developers, it means reducing the coupling among components in a system, so that changes made to one area don't unnecessarily impact another. If we want to have any hope of reducing cycle time, reducing these types of dependencies is key.

Organizations generally have the best intentions when moving to a microservice architecture. There is no lack of research put into the topic, and budgets are readily made available for such transformations. However, as with many things, the devil is in the details.

Service Boundary Definition

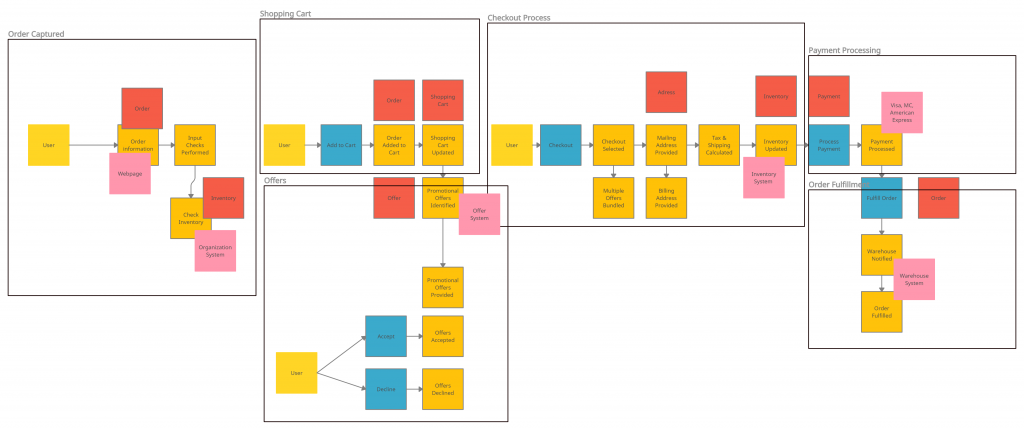

Many technical challenges exist when implementing this architecture, and developers tend to be focused on these. This is good, because distributing your system comes at a cost. Distributed systems must recover gracefully from network partitions and deal with eventual consistency, availability, message ordering, duplicates, and retries. But developers can be too quick to introduce new services without paying enough attention to their boundaries. Service names are announced right off the bat, often derived from database entities or existing domain language familiar to the team. Of course, this choice can be the right one, but if so, it is by chance. Services that do not have clear boundaries need to interact with other services more frequently in order to do anything useful. This results in unnecessary network traffic as well as unwanted coupling between service APIs. Releasing software now requires a coordination effort, which reduces a team's autonomy, which in turn increases cycle time.
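As one illustration of the duplicates and retries mentioned above, here is a minimal sketch of an idempotent message handler. The `Message` and `PaymentHandler` names, and the idea that the producer assigns a unique `message_id`, are assumptions made for this example only. A real service would persist the processed IDs rather than hold them in memory.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Message:
    message_id: str  # hypothetical unique ID assigned by the producer
    account: str
    amount: int


@dataclass
class PaymentHandler:
    """Applies each message at most once, even if it is delivered repeatedly."""

    balances: dict = field(default_factory=dict)
    processed_ids: set = field(default_factory=set)

    def handle(self, msg: Message) -> bool:
        # A redelivered message (retry or duplicate) is recognized by its ID and skipped.
        if msg.message_id in self.processed_ids:
            return False
        self.balances[msg.account] = self.balances.get(msg.account, 0) + msg.amount
        self.processed_ids.add(msg.message_id)
        return True


handler = PaymentHandler()
first = Message("m-1", "alice", 50)
handler.handle(first)
handler.handle(first)  # duplicate delivery, ignored
handler.handle(Message("m-2", "alice", 25))
print(handler.balances)  # {'alice': 75}
```

Every service that consumes messages over an unreliable network needs some variation of this bookkeeping, which is part of the cost of distribution.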

A popular way to address this shortfall is to use Domain-Driven Design techniques. Domain-Driven Design can dramatically reduce the complexity of a distributed system by identifying the domains and subdomains of that system and defining what are known as bounded contexts around them. An effective workshop format for achieving this is called Event Storming. Event Storming is a technique whereby developers and domain experts get together to rapidly collaborate on the behavior of a system. Only after the behavior has been laid out on a modeling surface will domains and subdomains begin to reveal themselves.

Participants are asked to describe events that should happen in a system, placing them in chronological order on a modeling surface. The process can be somewhat chaotic at first but quickly starts to take shape after the initial few minutes as people get more comfortable with it. After a few hours, the modeling surface will start to tell a story and, most importantly, will have inevitably sparked conversations between participants in areas that are either not well understood or misunderstood. Domain experts will often have different ideas of how a system works. It is only when they work in a collaborative environment that gaps start to appear.
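The outcome of such a session can be pictured as data. The sketch below is only an illustration: the `StickyNote` type, the event names, and the context labels are all invented for this example. It orders events chronologically, groups them by the candidate context the participants assigned, and counts how often the flow crosses a context boundary as a rough hint of how much the resulting services would need to talk to each other.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class StickyNote:
    order: int    # position on the timeline
    event: str    # domain event, phrased in the past tense
    context: str  # candidate bounded context agreed in the workshop


# Notes arrive in whatever order participants stick them on the wall.
notes = [
    StickyNote(3, "PaymentAuthorized", "Billing"),
    StickyNote(1, "ItemAddedToCart", "Ordering"),
    StickyNote(4, "ShipmentDispatched", "Shipping"),
    StickyNote(2, "OrderPlaced", "Ordering"),
]

# Step 1: arrange the events chronologically.
timeline = sorted(notes, key=lambda n: n.order)

# Step 2: group events by candidate context to see boundaries emerge.
contexts = defaultdict(list)
for note in timeline:
    contexts[note.context].append(note.event)

# Step 3: count places where consecutive events belong to different contexts.
handoffs = sum(1 for a, b in zip(timeline, timeline[1:]) if a.context != b.context)

print(dict(contexts))
print(handoffs)  # 2
```

A grouping that produces many handoffs for a single business flow suggests the boundaries may need another look.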

Most developers are aware of how difficult it is to name things appropriately. Naming services is no different, but this gets easier as the behavior is carved out on the modeling surface.

With such a design strategy in place, organizations can dramatically reduce communication between systems while getting a good start on potentially providing a database for each service.

Database per service

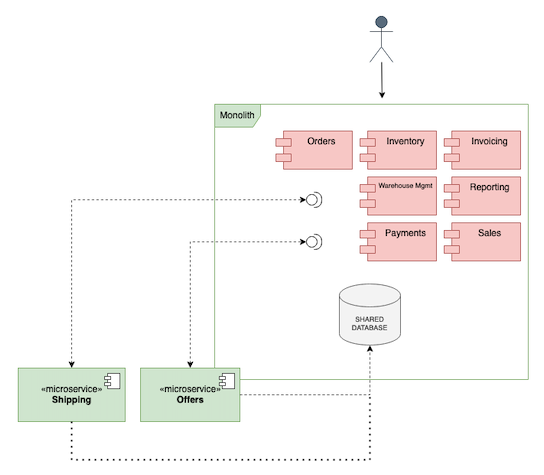

It is well known that the most difficult part of transitioning to a microservice architecture is breaking up the data. The data is the most valuable asset in any organization, and, as a result, a common approach to transitioning to a microservice architecture involves two phases:

- Create a number of microservices that will connect to a shared database

- Break up the shared database and allocate its data to each service

The problem with this approach is that the first phase will almost certainly take a few years to implement. When the services are finally rolled out, there may be little or no budget (or desire) left to finish the transition. Many organizations will declare victory at this stage and announce that they have a microservice architecture, when in fact what they have is known as a service-based architecture.

This distinction may sound somewhat pedantic, but it has important consequences. A shared database can dramatically reduce a team's autonomy and the speed at which it can deliver code to production. This is further compounded if there is a lot of code in the database. As well as limiting the ability to scale horizontally in any meaningful way, a shared database means that a change made by one team carries a greater risk of affecting another. Again, the coordination effort required to release to production works against one of the main reasons to adopt this architecture, which is to have autonomous teams with a low cycle time.
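The risk of one team's change affecting another can be shown with a small sketch. It uses Python's built-in `sqlite3` module with an in-memory database standing in for the shared database. The team names, the `customers` table, and its columns are hypothetical.

```python
import sqlite3

# One database shared by every service.
shared_db = sqlite3.connect(":memory:")
shared_db.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT)")
shared_db.execute("INSERT INTO customers (name) VALUES ('Ada')")


def billing_lookup(db):
    """Billing team's query, written against the original schema."""
    rows = db.execute("SELECT name FROM customers WHERE id = 1").fetchall()
    return rows[0][0]


def accounts_migration(db):
    """Accounts team's change: rebuild the table with `full_name` instead of `name`."""
    db.execute("CREATE TABLE customers_v2 (id INTEGER PRIMARY KEY, full_name TEXT)")
    db.execute("INSERT INTO customers_v2 (id, full_name) SELECT id, name FROM customers")
    db.execute("DROP TABLE customers")
    db.execute("ALTER TABLE customers_v2 RENAME TO customers")


before = billing_lookup(shared_db)  # works: 'Ada'
accounts_migration(shared_db)       # released by a different team

try:
    billing_lookup(shared_db)
    broken = False
except sqlite3.OperationalError:
    # The column Billing depended on no longer exists.
    broken = True

print(before, broken)  # Ada True
```

Neither team did anything unreasonable in isolation. The failure comes from both depending on the same schema, which is why releases end up needing coordination.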

If a shared database is the approach that is taken, it's vital that each stakeholder is aware of this as early as possible. They need to be made aware that the benefits that may have driven them to allocate budget and go with this architecture will not be fully realized until the database is broken up and distributed among services, which will likely take a number of years. However, if a commitment is made to follow through on the entire process, then this approach can be successful.

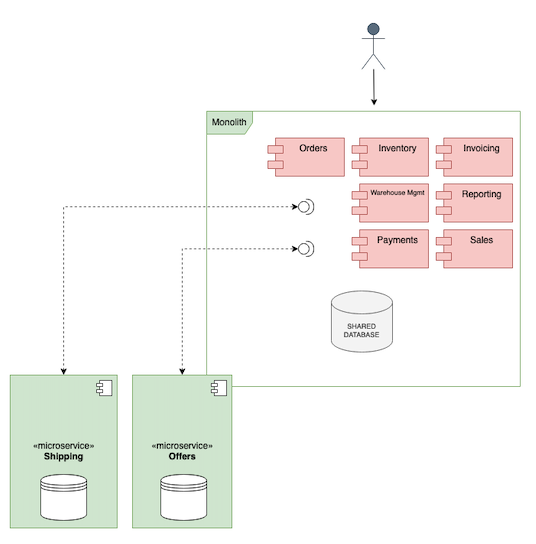

A safer way to transition from a monolith to a microservice architecture is to transition service and data at the same time.

Instead of the new microservices connecting to the shared database, the effort taken to separate data and create a new database per service is done at the same time as extracting the new service.
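A minimal sketch of the end state this approach works toward is shown below, again using in-memory `sqlite3` databases. The `CustomerService` and `OrderService` classes and their methods are invented for illustration, and a direct method call stands in for what would be a network call between services.

```python
import sqlite3


class CustomerService:
    """Owns the customer data; no other service touches this database."""

    def __init__(self):
        self._db = sqlite3.connect(":memory:")
        self._db.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT)")

    def register(self, name):
        cur = self._db.execute("INSERT INTO customers (name) VALUES (?)", (name,))
        self._db.commit()
        return cur.lastrowid

    def get_name(self, customer_id):
        row = self._db.execute(
            "SELECT name FROM customers WHERE id = ?", (customer_id,)
        ).fetchone()
        return row[0] if row else None


class OrderService:
    """Owns the order data and reaches customer data only through CustomerService."""

    def __init__(self, customers):
        self._customers = customers  # stands in for a remote API client
        self._db = sqlite3.connect(":memory:")
        self._db.execute(
            "CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, item TEXT)"
        )

    def place_order(self, customer_id, item):
        name = self._customers.get_name(customer_id)
        if name is None:
            raise ValueError("unknown customer")
        self._db.execute(
            "INSERT INTO orders (customer_id, item) VALUES (?, ?)", (customer_id, item)
        )
        self._db.commit()
        return f"{item} ordered for {name}"


customers = CustomerService()
orders = OrderService(customers)
customer_id = customers.register("Ada")
print(orders.place_order(customer_id, "book"))  # book ordered for Ada
```

Because `OrderService` never queries the `customers` table directly, the team that owns `CustomerService` can change its schema freely as long as `get_name` keeps its contract.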

While both approaches will eventually yield the same result, it is harder to declare victory with this approach until the job is completely finished. As each functional area is migrated to a microservice, everyone is acutely aware of how much is done and how much is left to do. It also gives an organization an early start at moving people around and forming autonomous teams. Some data will be easier to break apart than others, so it might be wise to start with a service that owns such data, rather than taking on some core product data up front and having nothing to show for it for a few months. There is also an opportunity to extract the most problematic services first, relieving the business of some major performance issues earlier in the process. As with every decision in architecture, these choices will depend on the state of play within the organization.

Conclusion

Transitioning to a microservice architecture is hard. There are many technical challenges for architects and developers to face. Distributing an application adds some inherent complexity, which can be mitigated by proven techniques such as Domain-Driven Design.

The recent shift to hybrid working has meant that the in-person workshop format of Event Storming is not always possible. Dedicated digital tooling for these workshops has largely been absent, with facilitators relying on general-purpose tools such as Miro instead. More recently, however, Michael Plöd from INNOQ has created some great resources in the ddd-starter-modelling-process repository on GitHub that help guide teams in many areas of Domain-Driven Design.

Domo is an exciting new tool created by Vaughn Vernon, who is also the author of Implementing Domain-Driven Design.

When creating a roadmap for such a transition, it is important to understand what stakeholders outside of IT circles expect from it. Their expectations will likely relate to performance improvements and more frequent releases to production. Some approaches to migration are riskier than others and may not produce the returns that these stakeholders expect. Whether you are willing to split up the database is a key consideration here.